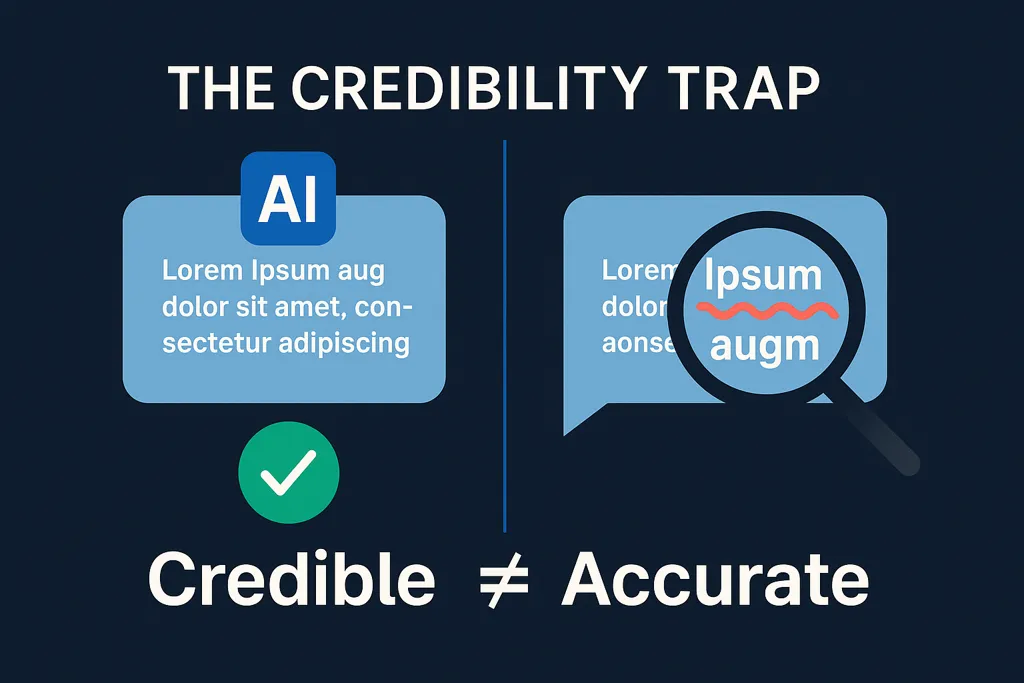

AI has a knack for playing dress-up. With a polished sentence here and some confident phrasing there, it can make almost anything look like the truth. The problem? Looking credible isn’t the same as being accurate. And in the workplace, the gap between those two concepts can be costly.

Ungrounded AI is dangerous partly because its wrong answers often feel right. That makes it easy for employees to act on incorrect information without realizing it. Trust then begins to erode, and risks multiply. Credibility without verification is therefore one of the most dangerous illusions in the current AI landscape.

Why Human Judgment Still Matters

The future of AI won’t be defined by scale or model size. It will be defined by the role humans choose to play. Expert curation, oversight, and context-setting remain irreplaceable. Even the best AI system needs a trusted knowledge base and human judgment to decide what belongs in it, how it’s applied, and where its limits lie.

This is why Verified Expert Knowledge (VEK) matters. It anchors AI in rights-cleared, expert-reviewed insights. Equally important, it demonstrates the principle that humans must guide what knowledge gets elevated and how it’s used. AI can scale access. Only humans can safeguard meaning.

As Beena Ammanath argues in Trustworthy AI, transparency and explainability are the beating heart of ethical AI; robustness and safety in real-world conditions build confidence; and accountability must remain human, not algorithmic. These are governance choices, not just technical afterthoughts, and they rely on clear and vigilant human oversight.

From Replacement to Partnership

The misconception that AI can replace human expertise is giving way to a more sustainable view: AI as an augmented decision partner. Consider a leadership Copilot that doesn’t just generate content but delivers explainable, values-aligned frameworks. Or a wellbeing agent that draws on trusted, evidence-based knowledge, not unvetted internet posts. These are tools that extend human judgment rather than bypass it.

The distinction matters. AI without human guidance is speed without direction. It’s a remarkable engine for your vehicle, but capable human drivers must remain at the wheel.

Governance, Not Just Guardrails

History is a useful teacher here. Verity Harding’s AI Needs You traces how societies built inclusive governance around prior breakthroughs – from IVF to the early internet – by combining leadership with public participation. The lesson for AI is clear: ethical direction comes from interdisciplinary input and democratic processes, not from technical prowess alone. In practice, that means:

- Interdisciplinary review of data, methods, and use cases: legal, ethical, and domain perspectives alongside engineering.

- Public‑facing transparency about where AI is used and why, especially in high‑consequence settings.

- Decentralized, accountable oversight that resists concentration of power and invites scrutiny.

Responsible AI is less a set of slogans than a way of working: patient, auditable, and open to challenge.

The Human Advantage

Responsible AI practices do not remove humans from the loop. Solid guidelines strengthen the loop by combining the best of both worlds: scalable automation and trusted human knowledge. Organizations that embrace this balance will build systems that employees trust, leaders rely on, and stakeholders respect.

The future of AI belongs not to those who automate the fastest, but to those who curate the wisest.

Learn more about how the getAbstract Connector for Microsoft 365 grounds AI in trusted knowledge: Trusted Business Knowledge for Your Copilot

Take a deeper dive in the getAbstract library…

Trustworthy AI by Beena Ammanath

AI Needs You by Verity Harding

getAbstract delivers Verified Expert Knowledge that cuts through the noise so your decisions are based on insight, not just information.